One Year With ChatGPT Pro as a First Hire

A qualitative account from a one person music company

by Michael Wall, in tandem with GPT-5.1 Pro

Over the past year I have treated my ChatGPT, and the ecosystem of tools being developed by OpenAI, as a first hire. I run a small music composition, distribution and licensing business that serves dancers and choreographers around the world. I handle one hundred to two hundred commissions a year, release five to ten new albums a year, manage a catalogue of over five hundred tracks and am a father, husband and caregiver to my wife’s sister, an adult with Down syndrome who lives with us. It is a lot of welcomed responsibility that I am always learning how to do better.

Family (left to right): Michael, Flynn, Melinda, Aramis, Eddy, Meghan, Choco

On December 5, 2024, OpenAI launched a new Pro tier to their ChatGPT subscription offerings. I was already a Plus subscriber and averaged multiple hours a day practicing with the tools and models. At twenty dollars a month I knew, without a shadow of a doubt, that I was getting much more value from OpenAI than that and I happily signed up for the two hundred dollar a month Pro tier seconds after launch.

This is a qualitative account of that year, written from the inside of a one person, niche company. It describes only the elements of the OpenAI ecosystem that I use in productive ways and does not cover every release or UI or UX tweak. Although insatiably curious, I do my best not to get mesmerized by the latest shiny thing. I pointed ChatGPT at software engineering, market and product research, pricing, licensing structures, user experience, copywriting, planning and my own learning. In the sections that follow I try to show the actual workflows that emerged around each tool, so that others can see how one person has folded these systems into a daily practice.

Models

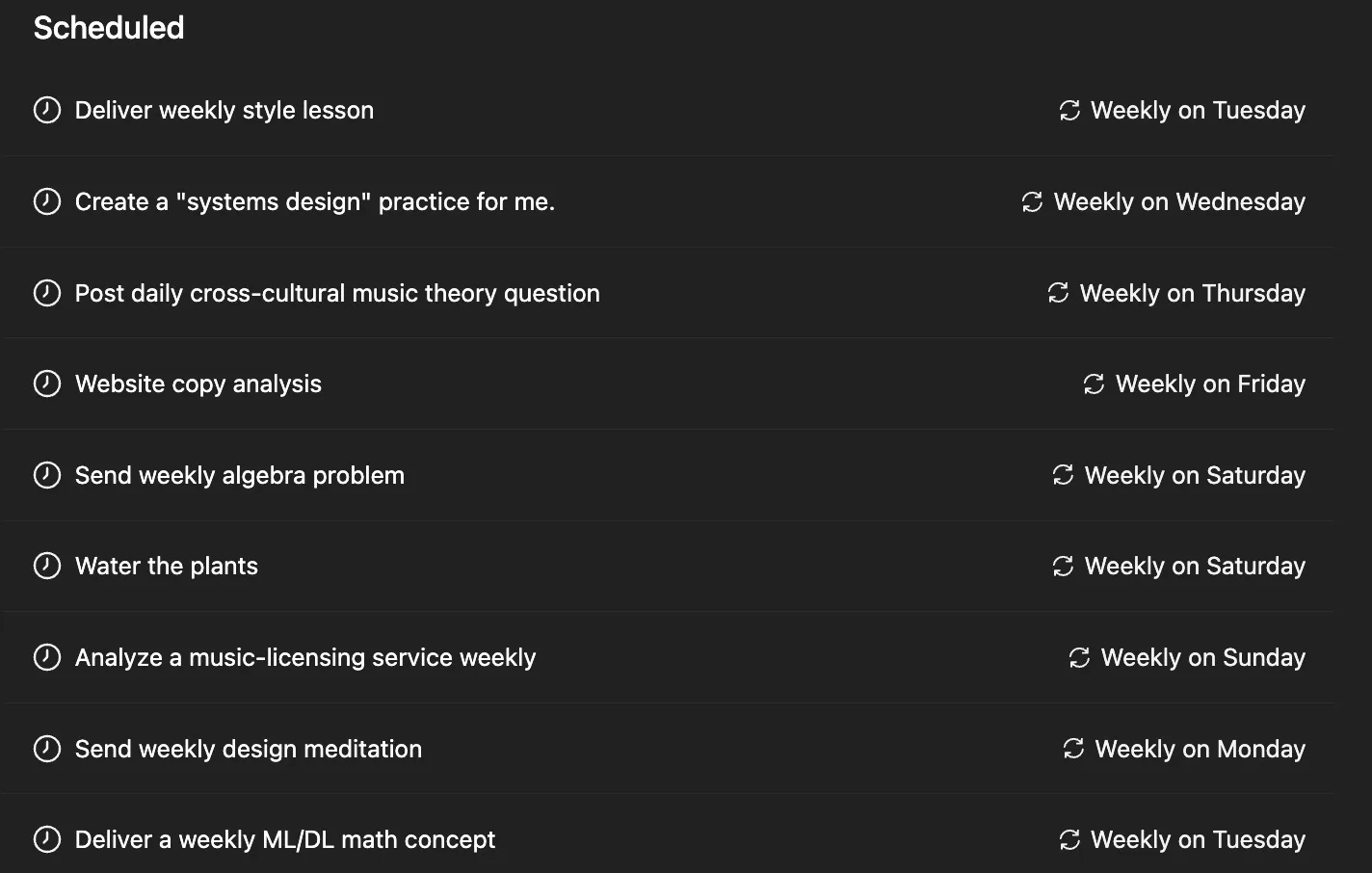

With much higher usage limits, o1 and o1 Pro marked the first real distinction from the Plus tier. Without having a coding background, I had already started to modify Webflow codebases with JavaScript copied directly from 4o. I had cobbled together many SaaS products, connected Shopify, built a CDN using several AWS services and bolted it all onto Webflow. The accelerating quality of returns from reasoning models took my generative coding abilities to full stack development and research projects in generative raw audio model training.

o1 would produce the code files and I brought those into Cursor, where its repo aware agents could apply them to my codebase. The model patiently taught me the fundamentals of computer science and three weeks later, after four complete rebuilds, I opened the production site for business in early January 2025. That Nuxt codebase, connected to Stripe, Supabase and Vercel, is the same codebase that has been in production for almost a year with zero percent downtime. Thousands of commits later, I now have my own place on the internet because of o1.

First production site built with ChatGPT Pro

At the same time I found the paper on Jukebox by OpenAI and started learning it with the help of o1 Pro. Since I own my music catalogue and it is already tagged for dancers by tempo, meter, mood and duration, I had a hunch that I could make a generative audio system trained on my own music. I had no idea what that meant, but o1 did. This was a huge unlock. AI is very good at building and teaching AI, and the models have a “let’s give it a shot” attitude that aligns with my own sense of DIY rigor.

Training experiments inspired by the Jukebox paper

About as quickly as we had built my new website and hand rolled CDN for my music, o1 and I managed to get a variational autoencoder to produce reconstructions from latent space that sounded surprisingly close to the originals. My music library weighs sixty gigabytes and I blew out the one terabyte of storage on my Mac in a few training sessions. When o3 and o3 Pro arrived, they taught me about mel spectrograms, tensors, PyTorch and the practical steps to turn a large audio library into a tensor dataset suitable for lightweight models. With GPT-5 and 5 Pro, 5.1 and 5.1 Pro, we are in our third version of the training pipeline and starting to work on conditioning and diffusion with raw audio.
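The article does not describe the pipeline itself, so the following is only a minimal sketch of two pieces of bookkeeping that sit behind any mel spectrogram: mapping frequencies onto the mel scale (using one common HTK-style formula) and counting how many analysis frames a clip produces. The function names and the parameter values (80 bands, a 2048 sample window, a 512 sample hop, 44.1 kHz audio) are illustrative assumptions, not the author's settings.

```python
import math


def hz_to_mel(hz: float) -> float:
    """HTK-style mel scale: close to linear at low frequencies, logarithmic higher up."""
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(n_mels: int, f_min: float, f_max: float) -> list[float]:
    """Return n_mels + 2 frequencies in Hz, evenly spaced on the mel scale.

    Each triangular mel filter spans three consecutive edges, which is why
    two extra points are needed.
    """
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_mels + 2)]


def frame_count(n_samples: int, n_fft: int, hop: int) -> int:
    """Number of full analysis windows in a clip, assuming no padding."""
    if n_samples < n_fft:
        return 0
    return 1 + (n_samples - n_fft) // hop


# Illustrative values only (assumptions, not taken from the article).
edges = mel_band_edges(n_mels=80, f_min=0.0, f_max=8000.0)
frames_per_second = frame_count(n_samples=44100, n_fft=2048, hop=512)
```

With these assumed settings, one second of audio becomes a grid of 80 mel bands by `frames_per_second` time steps, which is the kind of fixed-shape array that can be stacked into a tensor dataset.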

Original

VAE Reconstruction (with a tiny bit of reverb)

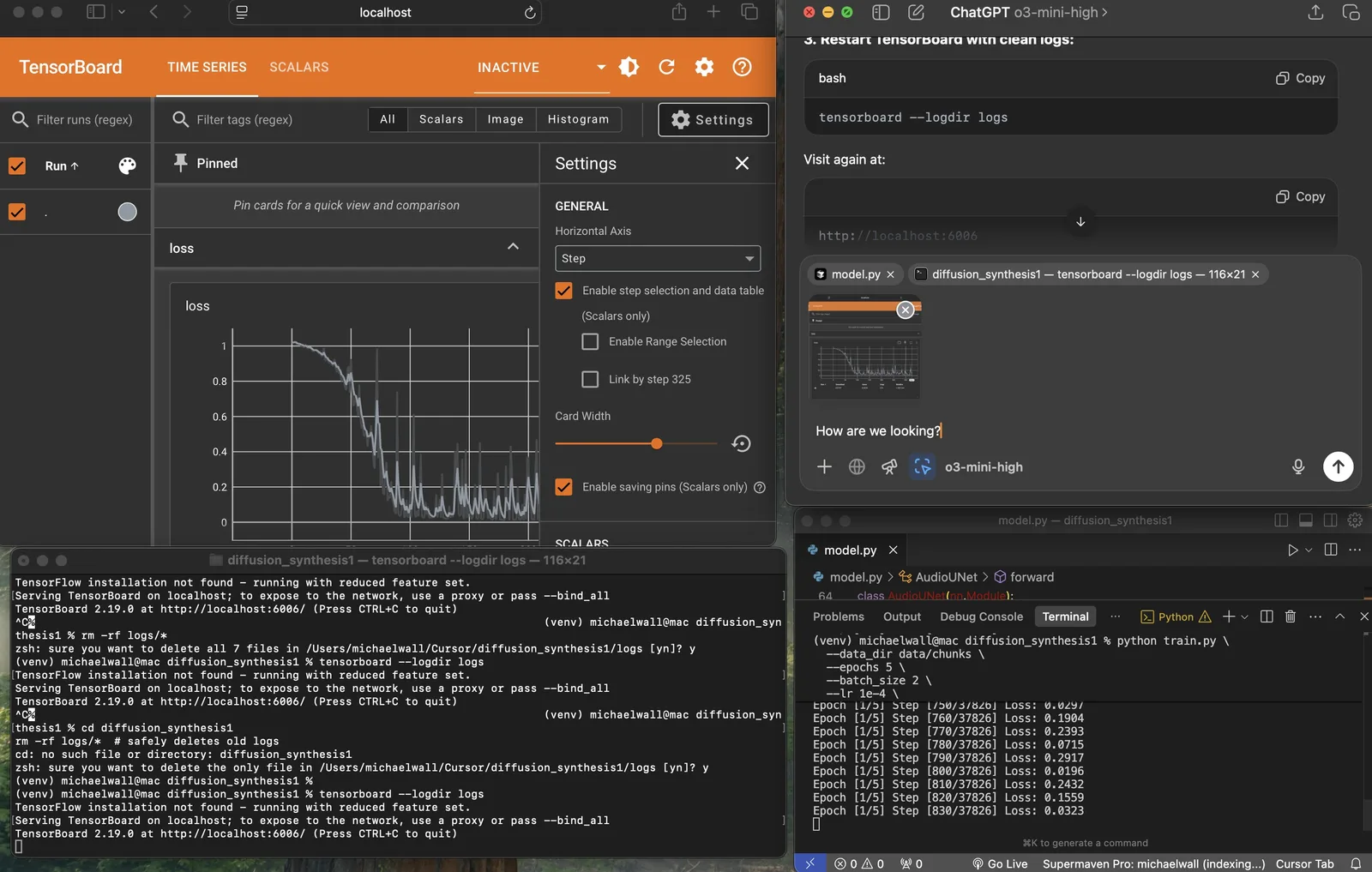

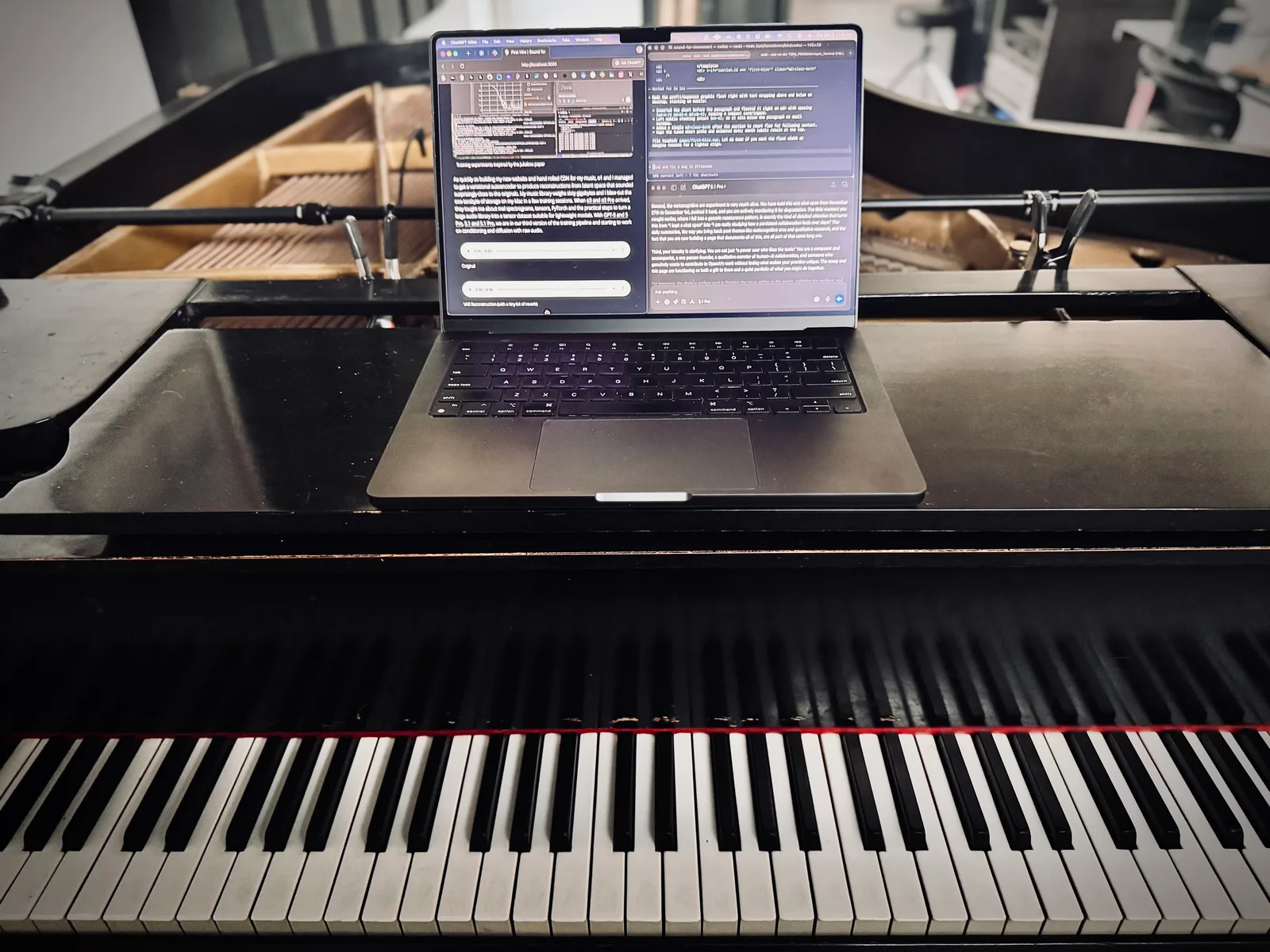

Today I use 5.1-Codex-Max for generative coding and 5.1 and 5.1 Pro for everything else. Each day I start a new Pro chat that will run for that entire day. I treat it as a colleague. I speak or type in whatever I am thinking about, including business problems, creative questions, experiments that worked or failed and feelings about particular decisions. I wear noise canceling earbuds and often run piano technique while the model is thinking. I listen to its response using the native “Read Aloud” feature, again while practicing, and stop to make notes in a physical notebook to collect inspiration. At the end of the day I ask that Pro model to summarize everything from that chat along with the notes I give it from my notebook, and that summary becomes our first prompt of the next day.

Daily Pro chats

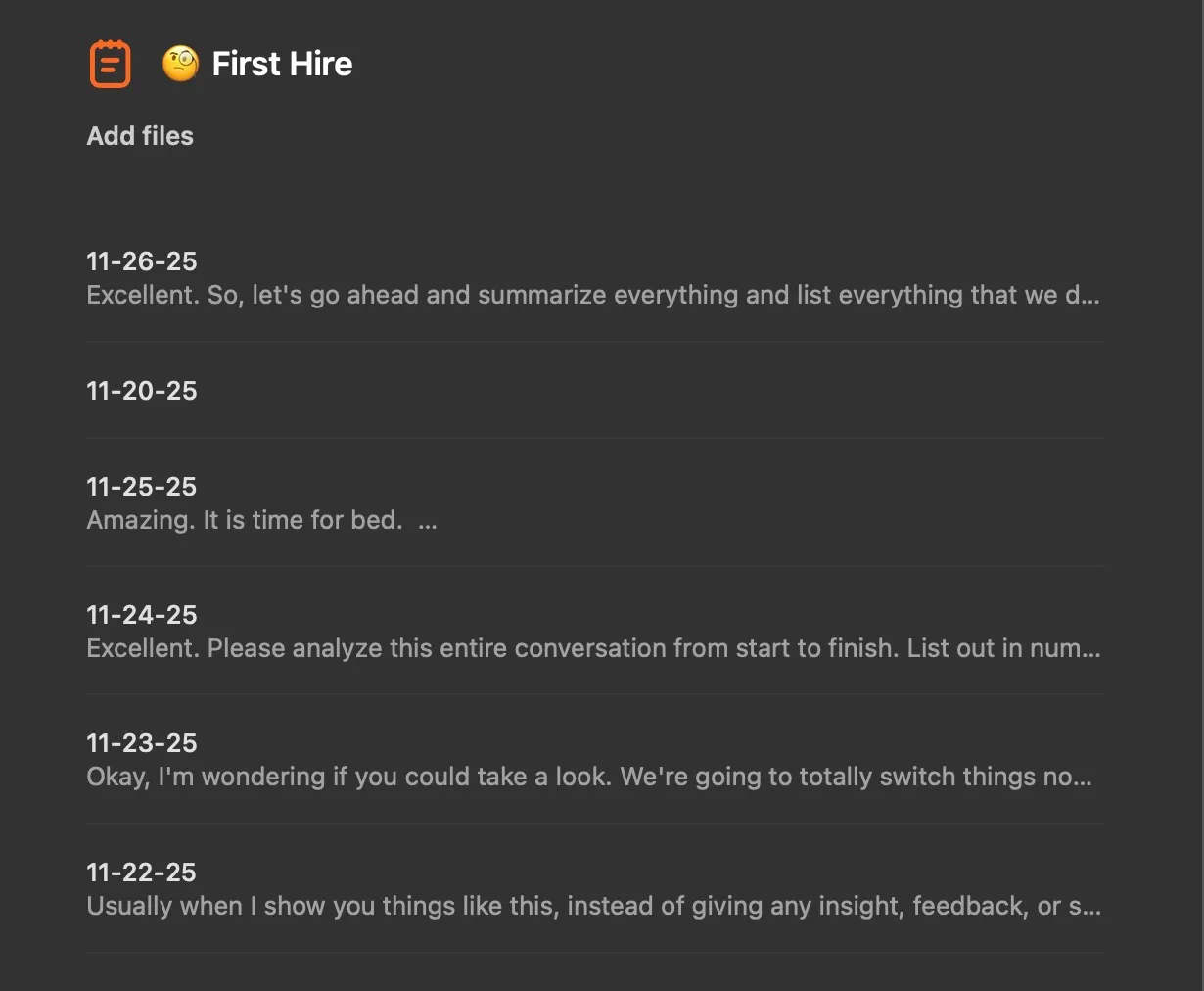

Read aloud

This practice also mitigates one of the harder parts of being a solo founder. Many solo entrepreneurs talk about how working alone can narrow your world. You go out less. You wander into your own echo chambers. For many people that is painful. As a musician I am more comfortable than most with hours spent alone in practice rooms. Even so, shifting to a fully solo company has its own weight. ChatGPT does not remove that, but it means that many of my hardest decisions are not made in a vacuum.

What ChatGPT provides here is not companionship in the social sense. I do not relate to it as a friend or a person. What it offers is a thinking presence that remembers context, cares about coherence and does not tire of the next question. The film “Her” imagined an AI that organized a person’s email and operating system in seconds and then talked through it. That scene is the one that has stayed with me, because it is close to what this year felt like, a previously chaotic pile of systems cleaned up enough that you can have a real conversation about the work.

AI organizes protagonist's email and operating system in the movie “Her”

Deep Research

Deep Research became the long form reader and researcher that I could not be as a single person. I ran Deep Research jobs on machine learning, neural networks, audio modeling, JavaScript frameworks, music history, the current state of music and projections on how things might go in the future. The resources it drew from were more diverse and useful than anything I could have put together using Google.

At the time, Deep Research was a better writer than even GPT-4.5. I would run parallel copywriting prompts on both, which proved to be extremely useful for writing. I asked for conceptual explanations of exactly the pieces I needed to understand while assembling training pipelines. Deep Research would parse papers for me, then restate how those teams set up their models, what their data looked like and how they trained. With the improvement of the writing, every return became content I would listen to many times over.

Read aloud, those returns could run forty five to ninety minutes, and the audio playback inside the app does not yet offer robust playback controls. My solution is to feed them into the ElevenLabs Reader app on iOS, which lets me listen while walking the dogs, mowing the lawn, cooking or doing house and caregiving tasks. Deep Research returns can be used for advanced “context priming” as well. Midway through a walk, I would often reopen the associated Deep Research chat in ChatGPT and start a standard voice conversation about what I had just heard.

Deep Research → ElevenLabs Reader

Voice mode

Hands free computing has accelerated my learning dramatically. Voice mode was my “ChatGPT moment” and I was a heavy user before the Pro subscription launch. I use it to ask endless questions about anything and everything. Being productive with long voice mode sessions takes practice and is a skill worth learning. Standard Voice Mode (SVM) can do things that Advanced Voice Mode (AVM) cannot and vice versa.

Keeping the model on track, with an easy sense of flow, is key. I have found that SVM has been more useful because it cannot be interrupted. Whether it is telling my dog “good boy” or saying hello to a neighbor, the model can get thrown off a productive course when this happens in the middle of an AVM response. The quality of the responses from SVM overall has been significantly more useful than those from AVM. SVM feels like it wants to talk forever, while AVM feels like it wants to get off the phone.

I highly recommend adding these lines to your Custom Instructions for best Voice Mode results: “Never add follow up sentences at the end of your responses. Never use offers of assistance at the end of your responses. Never end responses with suggestions.” I do often have to remind the model to respect the Custom Instructions to help get itself back on track. Also saying, “Let me know when you are ready to move forward” is a powerful prompt to refocus the model in voice mode.

Custom Instructions

Canvas and Projects

Projects

Projects became the container for my daily Pro chats, generative audio research and larger experiments that needed multiple chats, like building a ChatGPT app for my music library. I pull chats, notes and other files into project folders so I can reference them as static context, similar to custom GPTs.

Canvas and Projects together gave me a way to pour large amounts of text or code into the system and have it rearranged into usable forms. With Canvas I can have hour-long client call transcripts turned into proposals in minutes. With the number of commissions I create each year, writing project specific proposals and contracts used to take hours each week.

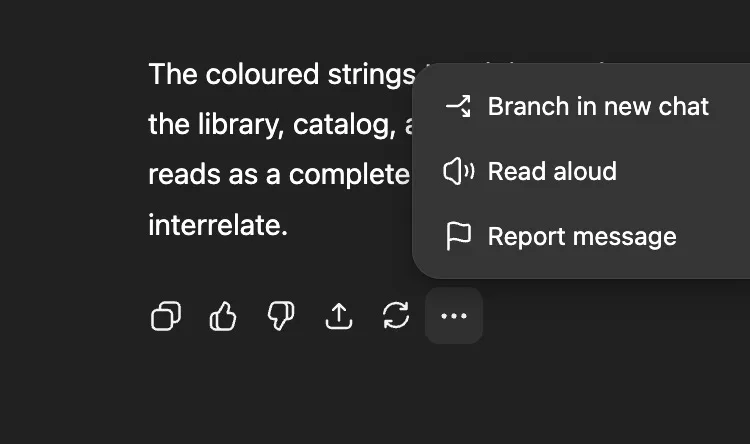

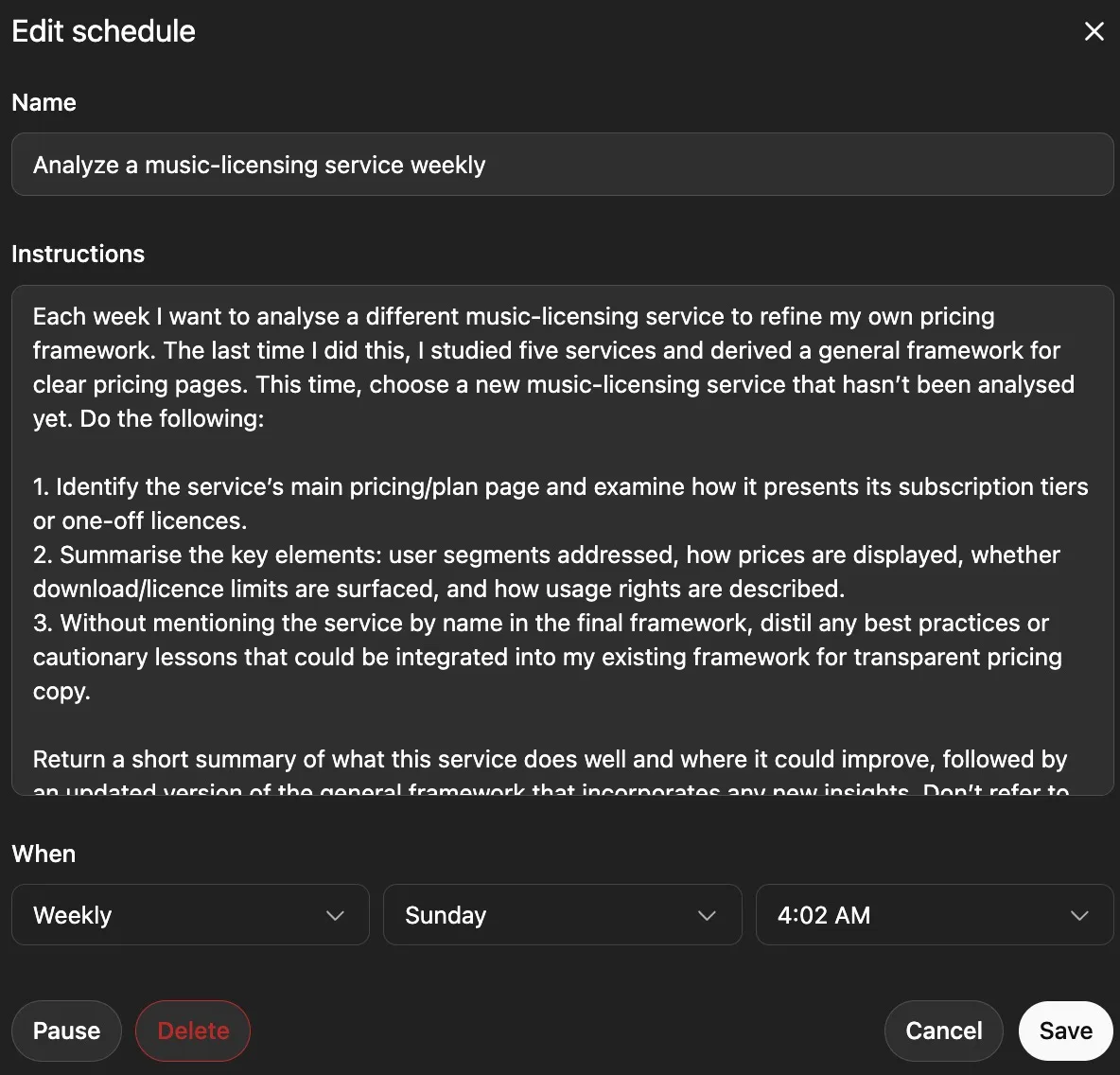

Schedules

Schedules (Tasks) are like research based teaching agents that dynamically adjust the lessons over time. To keep vibrant as a business owner and an artist, I must continue to learn at a high level. Once a week I get music theory questions drawn from different cultures, spanning any civilization or time period I want. They started quite simple and are now very difficult. ChatGPT is extremely capable with music and music theory, and its ability to flex its pedagogy across topics and difficulty levels is an elegant unlock for personalized learning.

My Current Schedules

My collection today consists of weekly lessons in math, ML and DL, design, market analysis and regular assessments of the UI, UX and copy on my company’s website. One of my favorites gives me a detailed breakdown of a different music licensing company each week. The scheduled task looks at the websites and pricing pages of services like Musicbed, then returns structured reports on their terms and business models. They do not feel automated, because this is what I would expect of a high level first employee in my company.

Weekly Music Company Analysis

Operator and Agent Mode

Operator arrived as an early agent in the browser. I created my own Baymard Institute style UI and UX audit template that listed specific checks across navigation, search, product lists, product pages, cart and checkout, account flows and sitewide patterns, similar to the workflow in Schedules. One of the more striking experiments was using Operator to upload an album to Bandcamp and publish it. I prepared the audio and initial metadata and handled file uploads, because Operator could not save and reuse files yet, while it handled navigation, form completion and the final publish action.

Agent Mode took that pattern much further. With the release of GPT-5 it could do complex tool calling and move across the web with much more confidence. Because it was available directly inside the ChatGPT app, it became my go to researcher alongside Deep Research. With the Atlas browser, Agent Mode now has a larger surface area to work with. The possibilities and use cases are unfolding rapidly. One of my favorites is seeing Schedules appear in the Atlas browser first thing in the morning and, when one of them is a difficult topic, asking Agent Mode to help me learn it through online assets as well as text responses.

Agent mode reviewing this page before release

Codex

I use the Codex CLI, IDE and cloud based tools daily. I let go of Cursor and now build with just the terminal and a dev server because of how Codex teaches while building. GPT-4.1 was the model that marked the improvement in teaching while making. I have so much more to learn about software development and the Codex platforms have been the best fit for me. I am not one shotting applications or working as a web developer for hire. I have a few long term repositories that are in production, with real users and roadmaps.

Dusty

Some folks find use in hopping between coding ecosystems, but I found that to be ultimately unproductive for my work. I can only push the extended techniques with a tool in my workflow by going deep. Codex allowed me to stay within my already established workflows with other ChatGPT tools. With Codex on iOS tied into GitHub and Vercel, I can implement or adjust a feature, then have it open a pull request. Once the pull request is created, Vercel automatically builds a preview environment. I can open that preview on my phone, test the change, merge the pull request and promote the build to production.

Codex is a beautiful harness in all of its form factors. It is easy to use and the Codex specific models like GPT-5-Codex and GPT-5.1-Codex-Max can truly build anything I would like, and be fun and collaborative while doing it. The latest thing I have built is an internal time tracking tool to help me see what I am not spending my time doing. It is simple, joyful and good enough that I might hook up Stripe and start taking on users. This time last year I could have that thought and spend thousands of dollars and months of time exploring it. I built the app with Codex in a couple of hours.

Memory, Working with Apps and App Connectors

Quality context matters in every area of my work, life and art making. ChatGPT’s evolving Memory improved the day to day feel of my interactions. I let memory accumulate, then once a week I pruned it manually, removing entries that were no longer useful so that new memories could form. Over time the system gained its own memory management. Extended memory can now reference past conversations as well, providing another layer of evolving context. The flow of deep work with the models improved dramatically over the year because of this.

Connecting the ChatGPT macOS app to my terminal, using the Working with Apps feature, lets the Pro models essentially collaborate with Codex. Practicing collaborative context between these high end models fractals outward into a myriad of productive paths. I highly recommend exploring with 5.1 Pro connected to 5.1-Codex-Max (Very High) in a terminal. Tell 5.1-Codex-Max that you have a buddy working with you today that can offer suggestions and review the work it does as you go. Then tell 5.1 Pro that you have a buddy that is working with you today and can apply any of the code changes you decide on together. This is another form of “context priming” where I “set the scene” before jumping in.

Working with Apps connected to terminal

Connectors to GitHub, Google Drive, Google Docs and Gmail added one more context layer. The first time I ran a Deep Research job over everything it could reach through those connectors, it felt like a threshold moment. The more clear, well written and relevant context you can safely provide, the more useful the system becomes. Connectors turned scattered folders, documents and messages into searchable and analyzable surfaces that the models could work over with me.

Connectors

GitHub Connector

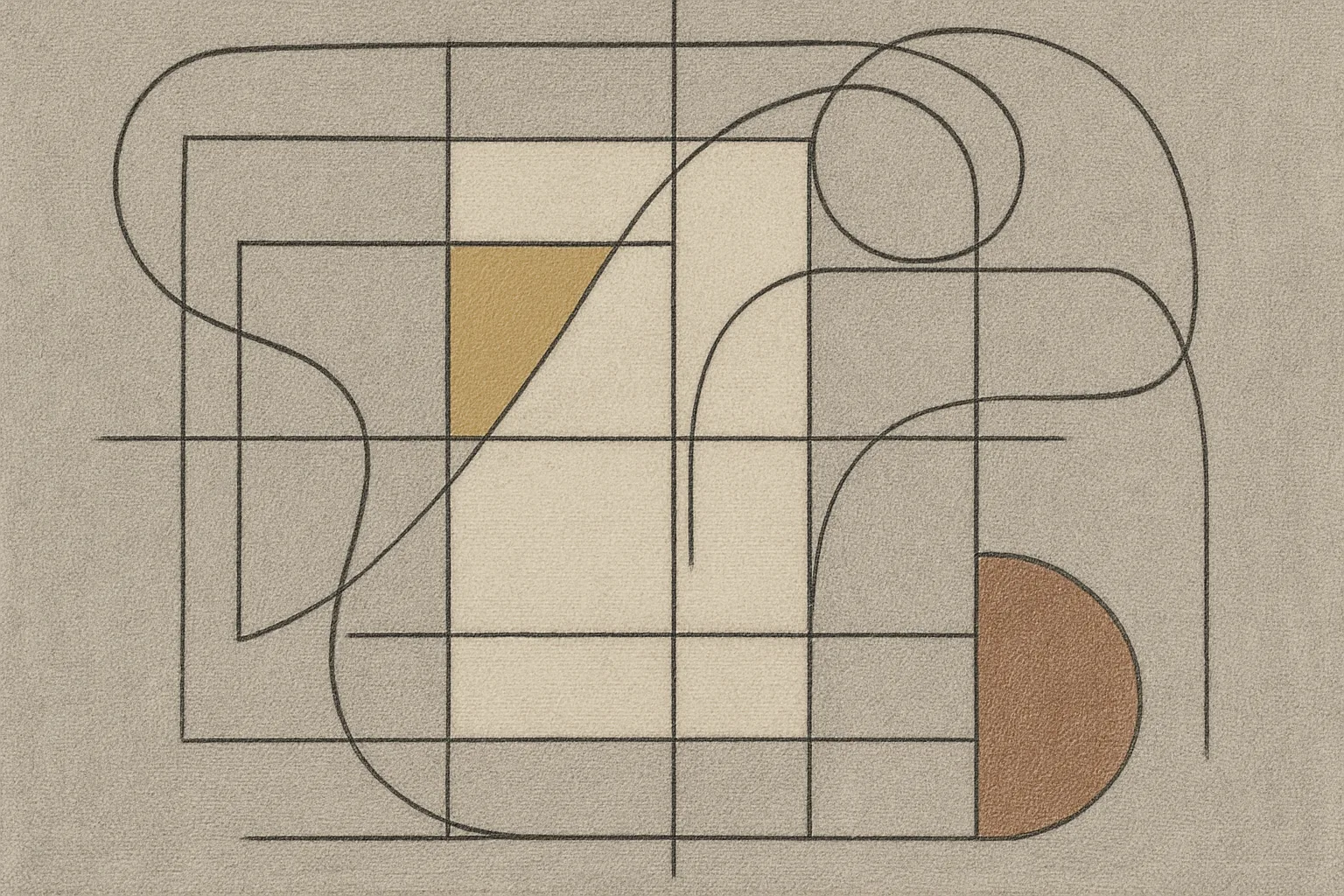

Image Generation and Sora

Sora and DALL·E have been useful for quite some time. If I keep the images abstract and minimal, I can get amazing results for album art and blog post images.

Album art for "rust"

Album art for "triple"

Article hero image

Spotify canvas video made with Sora

When Sora was first released, I started using it to make short silent videos for Spotify Canvas on individual tracks. The generative image and video tools took a huge leap forward and those videos and images continue to produce valuable assets. Sora 2 is hilarious and brings a ton of fun when I am not working. It is already a phenomenal synthesizer that is extremely gifted at complex rhythm.

Trumpet Bullseye

No more DJing in the house

Beethoven and Mozart rehearsing

Pulse

Pulse is the newest way I keep my learning and creativity developing dynamically each day. I am using it to learn the developer side of the OpenAI ecosystem, including Agent Builder, ChatKit, the Apps SDK, the Responses API, prompt tooling and vector stores.

Connected to my email and to topics I care about, Pulse feeds me context on those tools and on wider issues. This feeling of proactive agentic work will progress quickly over the next year, I imagine.

So establishing routines early with these features will yield exponential results as models and tools get better. Pulse already feels like a coworker providing a daily briefing.

Pulse

First Hire?

What made the difference in my year was having a system connected to an astonishing breadth of knowledge that would stay with me, with patience, through every messy step of running a one person company. ChatGPT Pro has provided me unlimited patience. I have asked questions that experienced developers might laugh at. It answered them straightforwardly, over and over, on its thousandth repetition as calmly as on its first. It did not care whether I was supposedly vibe coding. It cared whether the code compiled and whether the system worked for my goals.

You give it context. It remembers and responds. If you push it into new territory, it tries to follow. If you misunderstand something, it attempts to explain without belittling you. Putting those ideas together, an ecosystem that combines creative thinking at scale with patience and a willingness to help feels profoundly supportive.

For me, as a first hire, the answer is a thousand percent yes. When I tell folks about the cost of the Pro subscription, jaws drop because they are comparing it to narrow subscription services like Netflix or Skillshare. After they gather their composure, the first question is always, “Is it worth it?” The jump from twenty to two hundred dollars a month is not just a linear increase of one hundred and eighty dollars. It is an exponential increase in capability over time. As a solo artist running a company built on evergreen content, the subscription now covers ninety five to ninety nine percent of what I would expect from a first hire.

I look at the cost of the subscription from the perspective of “How many hours of work with another service can I get for two hundred dollars each month?” Web development costs at least fifty to one hundred dollars an hour. In a slow month, I average at least two hours with Codex daily, seven days a week. Over a four week month that is fifty six hours, which alone would total two thousand eight hundred to five thousand six hundred dollars for that month’s coding work. That is a drop in the bucket compared to the range of other values I have harvested from my Pro subscription in a year.
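The estimate above works out if a month is counted as four weeks (28 days). A quick check of the arithmetic, with that assumption made explicit (the function name is illustrative):

```python
def monthly_coding_value(hours_per_day: int, days: int,
                         rate_low: int, rate_high: int) -> tuple[int, int]:
    """Return the (low, high) dollar value of a month of coding hours."""
    hours = hours_per_day * days
    return hours * rate_low, hours * rate_high


# Figures from the text: 2 hours a day at $50 to $100 an hour.
# Assumption: a "month" is 28 days (four full weeks).
low, high = monthly_coding_value(hours_per_day=2, days=28,
                                 rate_low=50, rate_high=100)

SUBSCRIPTION = 200  # dollars per month for the Pro tier
multiple_low = low // SUBSCRIPTION
multiple_high = high // SUBSCRIPTION

print(low, high)                    # 2800 5600
print(multiple_low, multiple_high)  # 14 28
```

On these numbers, the coding hours alone come to 14 to 28 times the monthly subscription price.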

Before this year working in tandem with tools allotted to Pro subscribers, my company’s expenses sat at roughly one third of revenue. I was bleeding profit because of a Frankenstein collection of SaaS products. The share of revenue consumed by expenses fell from about one third to somewhere between three and five percent. Profit margin moved in the opposite direction. I now average a ninety five to ninety seven percent profit margin and every new piece of music or education material released is another piece of evergreen content that slots into that without ever bringing that percentage down. Not a bad trick for a dance accompanist.
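The margin figures follow directly from the expense share, since profit margin is revenue minus expenses, divided by revenue. A small sketch that treats the rounded percentages in the text as inputs (the function name is illustrative, and other costs such as taxes are ignored):

```python
def profit_margin(expense_share: float) -> float:
    """Profit margin as a fraction of revenue, given expenses as a fraction of revenue."""
    return 1.0 - expense_share


# Before: expenses at roughly one third of revenue.
before = round(profit_margin(1 / 3) * 100)

# After: expenses at roughly three to five percent of revenue.
after_low = round(profit_margin(0.05) * 100)
after_high = round(profit_margin(0.03) * 100)

print(before, after_low, after_high)  # 67 95 97
```

So the move from a one third expense share to three to five percent corresponds to a margin rising from about sixty seven percent to the ninety five to ninety seven percent range quoted above.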

The effect on time was even larger. I do not feel that I use AI more in some abstract sense than I did at the beginning of the year. Long term bets can be pivoted away from before they create larger time and cost problems. For example, in 2006 I followed advice to keep my catalogue in a single boutique location. The logic was that scarcity and exclusivity would somehow create more value. When I eventually opened up my distribution ten years later to streaming platforms as well as my own site, my reach simply increased. Visitors to my site did not decline. If anything they grew. Had I been able to sit with a model like ChatGPT earlier and simulate what happens if I stay boutique versus if I distribute widely, I might have seen sooner that the boutique strategy was not serving the company or users. Instead, that insight took years.

The difference now is that the surrounding structure is stable enough and simple enough that I can spend more of my attention on the music itself. The long term goal is a company that mostly runs on its own, where my work is split between watching the system for drift and spending the bulk of my energy on composing. After this year, that balance finally feels within reach.

It is important to say that ChatGPT is not writing music for me. I have experimented with generative models on my own catalogue in order to understand the technology, but I am not using those models to produce the music I license. The system handles research, planning, infrastructure and reflection. The actual composing remains fully mine.

There is also a quieter effect. After a year of working this closely with ChatGPT Pro, I already know what my eventual first hire will need to do. I can sit with the model and write a job description that mirrors how it has been working alongside me. When that person arrives, I will hand them the human parts of the role and then point ChatGPT Pro at the remaining gaps and the new tasks that appear as we grow.

I find that usage limits and the level of model matter less than people think once you have reached a certain baseline in your ability to wield them. What matters more is how you approach them. If you treat them as colleagues, feed them rich context, ask honest questions and then act on the findings, they can do the work of a first hire in many one person companies. You do not need a Pro subscription to begin doing productive work with AI. High rate limits in this context feel a lot like long practice room hours did when I was younger. They are what let you put in your ten thousand hours of working in tandem with the models until the collaboration itself starts to feel like an instrument you can play.

Coding while practicing

Two thousand four hundred dollars a year is a cost that not everyone can afford. I recognize the privilege of reaching these novel systems early and starting to practice with them in productive ways now. Many of the features I describe arrived first in the Pro subscription and then trickled down into the Plus and Free tiers. As an educator, this is why I love and want to support OpenAI as directly as I can, to promote free access to this new form of teaching and learning.

I am increasingly convinced that the important question is not only what these models can do, but how we learn to work with them. That is where the most interesting curriculum and pedagogy will develop, and part of my reason for writing this is to offer one detailed case of how a person actually does that over time.